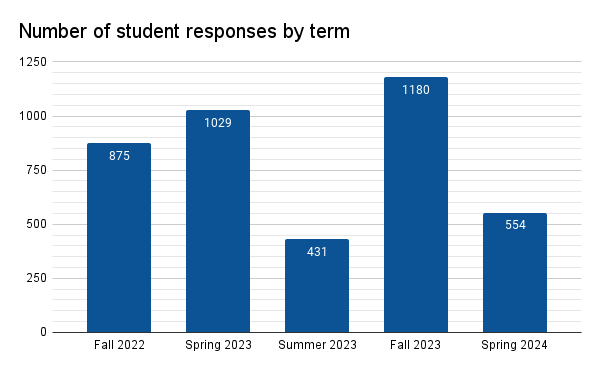

Student evaluations spring 2024

Student evaluations of instructor, course, and course materials provide guidance for the institution on areas of relative strengths and areas where there may be room for improvement. Bearing in mind that old data is not usefully actionable data, the intent of this report is to convey broad themes emerging in the evaluations to decision makers as rapidly as possible.

This report presumes familiarity with the student evaluations form in use at the institution. The responses were converted to numeric values:

Strongly disagree: 1

Disagree: 2

Neutral: 3

Agree: 4

Strongly agree: 5
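As a minimal sketch of the conversion above, the mapping can be expressed as a lookup table. The function name `to_numeric` and the sample responses are hypothetical, made up for illustration, and are not taken from the actual evaluation data or tooling.

```python
from statistics import mean

# Mapping taken from the list above.
LIKERT = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}

def to_numeric(responses):
    """Convert response labels to numbers, skipping blanks and unknown labels."""
    return [LIKERT[r] for r in responses if r in LIKERT]

# Hypothetical responses to a single item, for illustration only.
sample = ["Agree", "Strongly agree", "Neutral", "Strongly agree", ""]
values = to_numeric(sample)
print(values, mean(values))
```

Skipping blank responses rather than scoring them as zero is an assumption here; it keeps unanswered items from pulling the mean down.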

The differences between the terms are small. Because of the large sample sizes, the differences are technically statistically significant, but the effect sizes are small to very small. At best the data only hints at the possibility that student ratings may be slipping term-on-term, year-on-year.
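To illustrate how a difference can be statistically significant and still have a very small effect size, here is a sketch that compares two hypothetical terms. The response counts are invented for illustration and are not the actual evaluation data. Cohen's d and a Welch t statistic are assumed as the measures; the report does not state which statistics were actually used.

```python
from math import sqrt
from statistics import mean, variance

def expand(counts):
    """Turn {rating: count} into a flat list of ratings."""
    return [rating for rating, n in counts.items() for _ in range(n)]

def cohens_d(a, b):
    """Cohen's d using the pooled sample variance."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled)

def welch_t(a, b):
    """Welch's t statistic for two independent samples."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical counts of each rating in two terms (10,000 responses each).
term_a = expand({5: 5200, 4: 3000, 3: 1000, 2: 300, 1: 500})
term_b = expand({5: 5000, 4: 3100, 3: 1050, 2: 330, 1: 520})

t = welch_t(term_a, term_b)
d = cohens_d(term_a, term_b)
print(f"t = {t:.2f}, d = {d:.3f}")
```

With samples this large, |t| exceeds the usual 1.96 threshold even though d sits well below 0.2, the conventional cutoff for a "small" effect.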

As noted in the past, students tend to respond with agree or strongly agree. Teasing information out of means that are similar requires the realization that an excess of agrees relative to other ratings in the section represents a downgrade for that item. The following report looks to highlight what are small but potentially real differences.

Instructor evaluations

As in prior terms, the first item rating the instructor's overall effectiveness was lower than the other metrics in this section of the student evaluations. The reason for this remains unknown.

In terms of t-scores, the first item significantly underperformed relative to the other items. Areas of relative strength included awareness of the student learning outcomes (SLOs) and the instructor's knowledge of the content area: students perceive instructors as knowledgeable and are well aware of the SLOs in the course. Areas of relative weakness included maintaining regular contact and timely feedback.

Noting that hybrid refers to science courses with an online lecture and residential laboratory, the above chart suggests that the differences in delivery mode with respect to regular contact are small. One might expect that students will rate regular contact higher for courses with a residential component than for online courses. That said, instructors in online courses should continue to seek ways to improve regular contact and engagement. One recommendation would be to encourage online faculty to utilize the Canvas Teacher app on their mobile devices.

Instructor ratings have slipped across all areas over the past five terms. Regular contact and timely feedback remain challenging for instructors. Perhaps student expectations of feedback response times have shifted.

The trends for the past five terms are slightly negative with the negative trend, when considered across all criteria, being significant [Edited 5/3/2024]. Regular contact stands out as the most negative value.
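A term-on-term trend of this kind can be summarized by the slope of a least-squares line through the term means. The sketch below uses five hypothetical term means, invented for illustration and not the actual values. The helper `slope` is an assumption about how such a trend might be computed, not the method used for this report.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical mean ratings for one criterion over five consecutive terms.
terms = [1, 2, 3, 4, 5]
means = [4.40, 4.38, 4.35, 4.33, 4.30]

trend = slope(terms, means)
print(f"change per term: {trend:+.3f}")
```

A negative slope indicates ratings falling over time. Whether that slope is significant would require a further test that this sketch does not include.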

Note that the lead overall effectiveness criterion has behaved anomalously for the past five terms. Students consistently rate this criterion below their ratings in the other areas.

Course evaluations

On metrics evaluating the course, there was relative weakness in whether the learning outcomes helped the students focus in the course. Areas of strength included the perception that the course was a valuable learning experience, that the syllabus was clear and complete, and that expectations were clearly stated.

Noting the low rating for "The SLOs helped me focus in this course" and the high rating for "awareness of the SLOs" in the prior section, I am left wondering if the problem isn't the criterion itself. For example, knowing that "Students will be able to make basic statistical calculations" doesn't tell a student how to focus in a course, at least not from the student's perspective, where they are working on assignments, taking tests, and giving presentations. SLO mastery is a meta-result, not something that tells a student what to study on any given Monday.

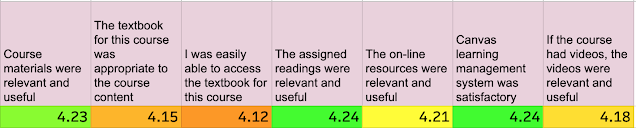

Course materials evaluations

In the course materials section the lowest rating was for textbook accessibility along with whether the textbook is appropriate to the course content.

Textbook accessibility and appropriateness have been areas of relative weakness for the past five terms. While both show a negative trend in the ratings across the five terms, the appropriateness of the text is falling at a faster rate than other course material metrics, with the exception of whether the videos shown were relevant. Ease of access to textbooks remains a challenge for the students and the institution.

In terms of materials, an area of relative strength was the Canvas learning platform. Canvas has been an area of strength in past terms as well. Students react positively to Canvas. I have to suspect that if one differentiated between well set up courses and poorly set up courses, there would be an even stronger positive reaction to the well set up courses.

Overall

As of 5/7/2024 with 644 evaluations submitted, the means for each section above are each close to the overall mean of 4.26.

For all evaluations, the mode and median are both 5, the mean is 4.26, and the standard deviation is 1. The standard deviation reflects that students tend to mark fours and fives, sometimes a three, but far less commonly a one and even less so a two.

Some of the ones may be student confusion. Some students mark ones for all sections and then write glowing, positive comments about the instructor and the course. That students so uniformly mark fours and fives is one reason for the use of modified t-scores in the charts above. A student choosing a four is making a statement by doing so. Thus small differences in the means can be argued as having meaning.
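For readers unfamiliar with t-scores, the sketch below computes conventional T-scores (50 plus 10 times the z-score) for a set of item means, so that items sit above or below 50 according to how they compare with the other items in the section. The item means are hypothetical. The exact modification applied in this report's charts is not described here, so this shows only the standard form, not the report's method.

```python
from statistics import mean, stdev

def t_scores(item_means):
    """Standard T-scores: 50 + 10 * z, with z computed across the items."""
    values = list(item_means.values())
    m = mean(values)
    s = stdev(values)
    return {name: 50 + 10 * (v - m) / s for name, v in item_means.items()}

# Hypothetical item means for the instructor section, for illustration only.
hypothetical_means = {
    "Overall effectiveness": 4.10,
    "Awareness of SLOs": 4.40,
    "Knowledge of content": 4.42,
    "Regular contact": 4.22,
    "Timely feedback": 4.24,
}

scores = t_scores(hypothetical_means)
for name, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{score:5.1f}  {name}")
```

Rescaling in this way spreads out means that are bunched between four and five, which makes small relative differences easier to see on a chart.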

Other metrics

Student evaluation responses have come in from all five campuses that participated in the student evaluations.

The responses include students in residential, online, and hybrid courses.

As seen in prior evaluations, students still prefer in class lectures. In the past, textbooks came in second as the preferred learning material, with online presentations in third. This term online presentations edged out textbooks but the difference is not significant.

The area marked "Online" is truncated: those are students who prefer online synchronous videoconferencing as a learning mode, such as Zoom or Google Meet. This is not a preferred learning option among the college's students.

Although in fall 2023 the students in online courses preferred in class lectures, this spring a preference for online presentations exceeded in class lectures by a small margin. Just over 25% of the online students express a preference for in class lectures.

Residential students strongly prefer their in class lectures. Of note is that in hybrid courses, courses which, for example, may have an online lecture and residential laboratory section, students prefer an in class lecture.

Students in online courses strongly prefer asynchronous online courses to synchronous online courses. One of the strengths of online education is providing learning opportunities to students on a schedule that the student sets, and this happens best in an asynchronous online course.

Greetings from Yap. Thank you, Professor Dana, for compiling these data. This is important information to know. I am intrigued by the fact that this semester the number of poll results has dropped significantly. Is this due to enrollment or student access to the survey? I also don't understand the "Physical Campus associated by the student" that is listed under "Other metrics". Is this the campus that the student has replied from, or where the student is taking face to face classes? All in all, I want to thank you for sharing. This is the first time that I have received information from the survey conducted college wide. Thank you again and have a great day!

I do not know what might be causing the negative trend in the overall ratings average over the past five terms. Whether this is something happening at the college or is something shifting and changing in the students themselves is unknown to me. That would take focus groups and further research. This is not an effect of declining enrollment and is not likely to be an access issue. The physical campus is the campus the student is registered on and is able to take face-to-face classes on. Thanks for the good words! I have been to Yap and have always enjoyed working with the campus there and the excellent team on that campus.
