Students in courses at the college anonymously submitted responses to a student evaluation survey. Students were instructed to fill out the survey once for each course they were in. The data below derives from multiple choice items on 415 surveys submitted by students. For the 415 submitted surveys, there are 10224 individual rating responses on 25 metrics. The metrics were in three broad categories: instructor evaluation, course evaluation, and course materials evaluation.

Distribution of ratings given by students

Students tend to Agree (4) or Strongly agree (5). The overall average for all metrics was a 4.42 with a standard deviation of 0.99. The data is distributed closely around the mean. In general, students tend to favor agreeing with statements rather than disagreeing. This makes determining areas of strength and weakness harder to discern.

To better identify differences among the metrics, and areas of relative strength and weakness, the horizontal axis for the charts below is essentially a form of a t-statistic. The value calculated is the number of standard errors of the mean above or below the overall mean for that particular average. Areas of strength are denoted by green bars and extend above positive two standard errors on the right. Areas of relative weakness are denoted by red bars and extend below negative two standard errors on the left. Brown bars are areas close to the mean for that section of the survey.
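The calculation described above can be sketched roughly as follows. This is a minimal illustration, not the code used to build the charts: the function names, the sample ratings, and the choice of the sample standard deviation are all assumptions, and the reference mean is passed in as a parameter because it could be either the overall mean or the mean for a section of the survey.

```python
from math import sqrt
from statistics import mean, stdev


def standard_errors_from_mean(ratings, reference_mean):
    """Number of standard errors the average of `ratings` lies above or below `reference_mean`."""
    standard_error = stdev(ratings) / sqrt(len(ratings))  # sample standard deviation (assumption)
    if standard_error == 0:
        raise ValueError("all ratings are identical, so the standard error is zero")
    return (mean(ratings) - reference_mean) / standard_error


def bar_color(score, cutoff=2.0):
    """Green above +cutoff standard errors, red below -cutoff, brown in between."""
    if score > cutoff:
        return "green"
    if score < -cutoff:
        return "red"
    return "brown"


# Hypothetical ratings for one metric, compared against the overall mean of 4.42
metric_ratings = [5, 5, 4, 5, 5, 4, 5, 5, 5, 4]
score = standard_errors_from_mean(metric_ratings, reference_mean=4.42)
print(round(score, 2), bar_color(score))
```

With only ten hypothetical ratings the standard error is large, so even an average of 4.7 stays inside the two standard error band. With hundreds of responses per metric the standard error shrinks and small differences in the averages become visible.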

Instructor evaluation

- Overall, this instructor was effective

- The instructor presented the course content clearly

- The instructor emphasized the major points and concepts

- The instructor was always well prepared

- The instructor made sure that the students were aware of the Student Learning Outcomes (SLOs) for the course

- The instructor gave clear directions and explained activities or assignments that emphasized the course SLOs

- The instructor initiated regular contact with the student through discussions, review sessions, feedback on assignments, and/or emails

- The instructor presented data and information fairly and objectively, distinguishing between personal beliefs and professionally accepted views

- The instructor demonstrated thorough knowledge of the subject

- I received feedback on assignments/quizzes/exams in time to prepare for the next assignment/quiz/exam

Instructor evaluation relative results

The first metric performed unusually and, to some extent, inexplicably low relative to the other metrics in the instructor evaluation section. The other metrics do not suggest a clear explanation for why the average for the overall rating is that far below the mean. Perhaps when considering the instructor on an "overall" effectiveness basis, the students were more comfortable giving a rating of "agree" than "strongly agree." This result is markedly different from the evaluations in spring 2022.

Instructor strengths were seen in the areas of preparedness and awareness of student learning outcomes. Timely feedback was an area of relative weakness but has improved since spring 2022. Faculty may be adjusting to the nature of online education where there are functionally no days off. Students submit work around the clock seven days a week, and timely corrective feedback is crucial to learning and engagement.

Instructor evaluation averages by metric

The low relative value for the first metric, 4.36, can also be seen in this table. Note that this is still between agree and strongly agree. With 63% of the ratings being a 5 and 88% being a 4 or a 5, the averages will usually be between four and five. Looking for strengths and weaknesses means looking for small differences in the averages. The averages on each metric also provide a reference point when looking at individual faculty averages.
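The arithmetic behind that claim can be sketched as below. The two percentages come from the text; how the remaining ratings split among 1, 2, and 3 is not stated, so the sketch only brackets the overall average between the two extreme cases. The variable names are illustrative.

```python
share_5 = 0.63        # share of all ratings that were a 5 (from the text)
share_4_or_5 = 0.88   # share of all ratings that were a 4 or a 5 (from the text)

share_4 = share_4_or_5 - share_5    # ratings that were a 4
share_low = 1 - share_4_or_5        # ratings that were a 1, 2, or 3

# Extreme cases: every remaining rating is a 1, or every remaining rating is a 3
lowest_mean = 5 * share_5 + 4 * share_4 + 1 * share_low
highest_mean = 5 * share_5 + 4 * share_4 + 3 * share_low

print(round(lowest_mean, 2), round(highest_mean, 2))
```

The overall average has to fall inside this narrow band, which is why differences between metrics show up only in the second decimal place.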

Individual instructor averages for an instructor with both a residential and an online course

The above individual instructor ratings suggest relative weakness for this instructor in the area of presenting data fairly and objectively, distinguishing between personal beliefs and professionally accepted views. However, the average on this metric for the instructor is 4.75 while the average for all evaluations on this metric is 4.43. So while this may be an area in which the faculty member can improve, the faculty member is already performing above the overall average on this metric, by roughly six standard errors of the mean. Given the tendency of measures to return to longer term means, improving this value may not be possible.

Where a faculty member is below the overall evaluation average for a metric, that metric is an area in which the faculty member may be able to improve.

Course evaluation

- Overall, this course was a valuable learning experience

- The course syllabus was clear and complete

- The student learning outcomes were clear

- The SLOs helped me focus in this course

- This course delivered online was equal or better value than if I had taken it face-to-face

- Assignments, quizzes, and exams allowed me to demonstrate my knowledge and skills

- The testing and evaluation procedures were fair

- There was enough time to finish assignments

- Expectations were clearly stated

Course evaluation

The course evaluation section was impacted by a very low relative score for the statement "This course delivered online was equal or better value than if I had taken it face-to-face." This question was retained from the spring 2022 student evaluation when the form was deployed in online courses. This summer the form was also deployed in residential courses. For the fall term this metric should either be rewritten or removed. The weak performance on this metric will also show up in the individual faculty evaluations. What, if anything, this question meant to a student in a residential course is unclear.

With the statistically problematic metric number five removed, the areas of relative strength and weakness for courses become clearer. The courses are seen as valuable, the syllabi are generally clear, and the expectations are clear.

Relative to the other course metrics, students did not as strongly agree that the student learning outcomes helped them focus in their course. The students also did not as strongly agree that the assignments, quizzes, and tests allowed them to demonstrate their knowledge and skills. Test and evaluation procedure fairness also did not get as strong a rating as some of the other areas.

Course evaluation averages

Again, when looking at areas of strength and weakness, one must bear in mind that overall students agree with the statements. Even the area of unusual weakness is still an area the students agree with; they just do not strongly agree with the statement. The relative strengths shown in the charts above are only intended to help show where improvements are more likely to be made and where support and training might be delivered.

Individual instructor averages for an online course

Note that the colors displayed on a faculty report are also relative. That the above instructor has more areas of yellow than the overall averages does not mean the instructor's values are below the green values seen in the overall averages. For each instructor the colors are relative to their own range of scores. This is intended to highlight areas where they may be able to improve.

Although the individual instructor above has averages above the overall average for each metric, this instructor also received a relatively lower score for the value of the online course versus face-to-face.

Course materials

- Course materials were relevant and useful

- The textbook for this course was appropriate to the course content

- I was easily able to access the textbook for this course

- The assigned readings were relevant and useful

- The on-line resources were relevant and useful

- The course online grade book was satisfactory

Course materials

For the six statements on course materials, students concurred that the readings were relevant. Areas for possible improvement would be providing easier access to the textbook and working on improving the relevancy of online resources.

Course materials averages

Again, the averages remain between agree and strongly agree, and the differences in these metrics are small. All of the averages are below the overall mean of 4.41, which leaves open the suggestion that perhaps as a category there is room for improvement.

Individual instructor course materials averages for an online course

That there may be room for improvement is suggested by the above averages for a fully asynchronous online course where the textbook was not only online, but each chapter of the text was available as a page in Instructure Canvas. These pages are in the native format of pages in Canvas, which optimizes their display on both desktop and mobile devices, including when accessed from the Canvas Student app. While this is not possible for most courses, the values provide an indication of what is possible when access to the textbook is as frictionless as possible.

The current iteration of the student evaluation form could use updating to also specifically ask whether instructional videos were relevant and useful. In the course for the instructor above, the primary means of delivering material was through explanatory videos, with the textbook as a secondary resource.

One of the questions on the student evaluation asked "Please check which mode of delivery you prefer." When the ease of access to the textbook question is split by the preferred mode of delivery, the two means remain about the same. Note that this is the preferred mode of delivery, and not the actual mode of delivery. Actual mode of delivery is more difficult to determine as students did not always include a section number. In the spring run the section number was requested as a separate value, but based on the data this was confusing for the students. There are students who do not know the course number of the course they are in, let alone the section number.

For reference, the preferred mode of delivery item was answered with 60% of the students preferring face-to-face and 40% preferring online classes.

Student response habits

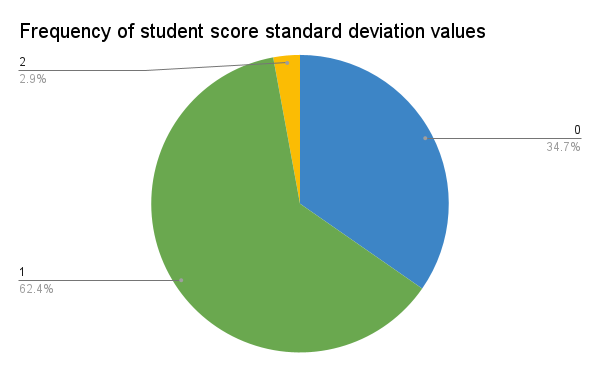

When viewing the raw data one often sees students who have voted a "straight ticket" checking the same number for every metric. The above chart shows the distribution of the standard deviations for the students. Thirty-five percent of the students answered with the same answer for every metric. Most often this was all 5s or all 4s. In some instances, however, one can see students who checked all 1s. These generate a standard deviation of zero, hence the label on the chart.

Students who tended to alternate between two numbers, or only rarely choose a different number, comprised 62% of surveys submitted. These are students whose scores on the 25 metrics generated a standard deviation of less than one.

Only three percent of the students had a standard deviation of more than one. These are students who employed a wide range of answers from strongly disagree to strongly agree.
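The grouping described above can be sketched as follows. This is an illustration under assumptions rather than the analysis actually run: the four surveys are made up, the function name is hypothetical, and the sample standard deviation is used because the text does not say which form was calculated.

```python
from collections import Counter
from statistics import stdev


def response_habit(answers):
    """Bin one survey by the standard deviation of its ratings."""
    spread = stdev(answers)  # sample standard deviation (assumption)
    if spread == 0:
        return "zero"            # same answer for every metric
    if spread < 1:
        return "less than one"   # mostly one or two adjacent answers
    return "one or more"         # wide range of answers


# Hypothetical surveys, each with ratings on 25 metrics
surveys = [
    [5] * 25,               # all 5s
    [4] * 25,               # all 4s
    [5, 4] * 12 + [5],      # alternates between 4 and 5
    [1, 5, 3, 2, 4] * 5,    # uses the full range of answers
]

habits = Counter(response_habit(survey) for survey in surveys)
print(habits)
```

Dividing each count by the number of surveys gives percentages comparable to the 35%, 62%, and 3% reported above.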

Campus confusion

Which campus is the course on?

The question "Which campus is the course on?" was confusing for the students.

The above chart is for a single section of an online course which had students on Yap and Pohnpei in the course. The expected answer would have been that the campus is the "Online" campus for all students, but only half of the students chose this. The other half of the students must have identified the campus from which they accessed the course.
