Midterm update of preparedness and previous polls

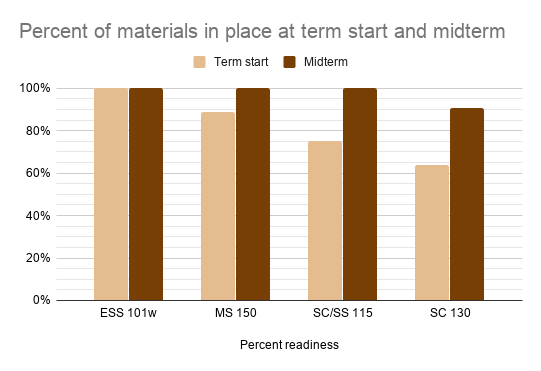

At term start my target was to have 80% of my materials - assignments, quizzes, tests, presentations - in place for all four of my courses. I was keenly aware from the summer that once the term began the bulk of my time would be spent providing student support and that I would no longer have significant amounts of time to prepare and deploy material for my courses. My goal was to have enough of a head start that the students would not catch up to my preparations prior to the end of the term. Thus far I have succeeded, but my pace of preparation has been slower than even I anticipated. I had expected to wrap up course development by midterm, but in one course I am still developing materials for deployment in November.

That said, with three courses complete, I am now confident that materials will be in place for students ahead of their arrival at that point in the curriculum. My own experience certainly seems to support the need to be 80% ready on day one. I am also all too keenly aware that many faculty were not 80% ready on day one and are finding themselves continuously scrambling to prepare materials while serving student needs in the current material.

Schoology Update polls allow students to go back and change their vote at any time. I have seen clear indications of students going back and changing a vote, thus the poll is a dynamic snapshot at a moment in time. At midterm there remain faculty who take more than a week to respond or do not respond to messages and emails. An update of the most recent poll does not show changes in the responses, but there is an increase in withdrawals. Two of the withdrawals were student initiated. The rest were the result of non-contact from students. Reaching out directly and through counseling did not produce a response from these students. Once non-participation reaches roughly four weeks, essentially a month, I can no longer document "substantive and regular" interaction - especially the "regular" portion of the double test. At that point, if I have no other information to work with, I go ahead and withdraw the student.

I remain concerned that the current learning management system does not provide sufficient and appropriate administrative insight into what is happening out in the virtual classrooms - both what is going right and what is going wrong. I continue to hope for the opportunity to pilot test other options that might be more attuned to the needs of higher education: options that would properly protect faculty academic freedom while balancing the need of supervisors and administrators to know what is happening for students out in the virtual classrooms.

I would also hope that faculty see themselves as the learning assessment researcher in their own classroom and use what few tools we have to better understand how to assist our students in this new environment.
