Tests don't test except when they do

With the transition to online courses, many instructors seek to recreate the conditions of residential class quizzes, tests, and examinations. Instructors may impose time limits to mimic residential class assessments, deploy lockdown browser software such as Respondus, or require students to sit the assessment while being monitored in real time by video link.

Each escalation in monitoring is an attempt to address a failing in the prior level. Lockdown browser? The student accesses a second device. Hence the step up to video monitoring to see that the student never looks away from the screen, which can leave students unable to go to the restroom when they need to do so.

A colleague once said to me, "In online education, tests do not test." Trying to recreate the traditional in-class test experience in an online course is the proverbial attempt to put new wine in an old wineskin. I am reminded of the Princess Leia quote, "The more you tighten your grip, Tarkin, the more star systems will slip through your fingers." One has simply created a prison in which the student inmates seek ever more clever ways to circumvent whatever guardrails the instructor has put in place.

I went the other direction. Tests in my course have effectively no time limit and no other restrictions of any kind, other than that each test allows only a single attempt, once and done. As I say to the students, "The test is open book, open notes, open video, open Google, unmonitored, almost no time limit (a week to complete the test), perhaps even open neighbor." I use other elements in my courses to provide more authentic assessment of what the students know, can do, and value. Tests are just one part of a larger set of assessment tools and are not the be-all and end-all of assessment in my courses. Just one small piece.

While I can be heard telling colleagues that "tests don't test" and that online tests are rarely "authentic forms of assessment" (only a few careers involve regularly taking and passing online tests), I have also learned that "online tests don't test except when they do."

My expectation is that there will be students who will look up answers, collaborate with friends, and turn in stellar tests. And in some sense I rather hope that they do this. My experience is that students in an online course still find ways to fail an online test.

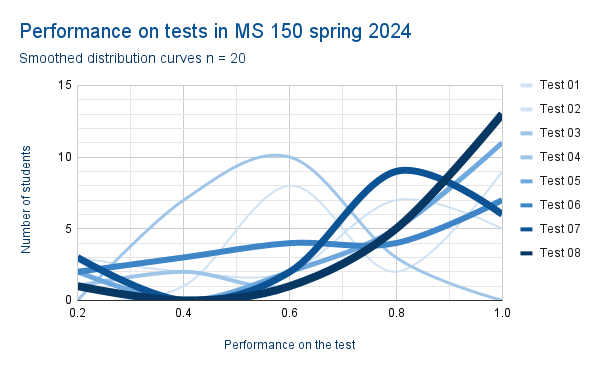

The above chart depicts the distribution curves for eight tests in the online section of MS 150 Statistics. The thicker, darker curves are tests later in the term. The frequency intervals binned the data with class upper limits at 20%, 40%, 60%, 80%, and 100%. This coarse binning was necessary to smooth the data sufficiently to reveal the distribution patterns across all eight tests.
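For readers who want to try the same kind of binning on their own test scores, here is a minimal Python sketch. It assumes scores are percentages from 0 to 100 and that each class includes its upper limit, so a score of exactly 20% lands in the first class. The function names and the sample scores are hypothetical illustrations, not the course data or the tool used to make the chart.

```python
from bisect import bisect_left

# Class upper limits in percent, as described for the chart.
UPPER_LIMITS = [20, 40, 60, 80, 100]


def bin_scores(scores):
    """Count how many percentage scores fall in each class.

    Assumption: each class includes its upper limit, so the classes are
    0-20, above 20 to 40, above 40 to 60, above 60 to 80, above 80 to 100.
    """
    counts = [0] * len(UPPER_LIMITS)
    for score in scores:
        if not 0 <= score <= 100:
            raise ValueError(f"score out of range: {score}")
        # bisect_left returns the index of the first upper limit >= score.
        counts[bisect_left(UPPER_LIMITS, score)] += 1
    return counts


def relative_frequencies(counts):
    """Convert class counts to fractions of the total number of scores."""
    total = sum(counts)
    if total == 0:
        return [0.0] * len(counts)
    return [count / total for count in counts]


# Hypothetical scores for one test, for illustration only.
example_scores = [15, 20, 35, 55, 60, 72, 88, 95, 100, 100]
example_counts = bin_scores(example_scores)
example_fractions = relative_frequencies(example_counts)

for limit, count, fraction in zip(UPPER_LIMITS, example_counts, example_fractions):
    print(f"up to {limit}%: {count} students ({fraction:.0%})")
```

Plotting the relative frequencies rather than the raw counts keeps tests with different numbers of test takers comparable on a single chart.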
